Last week's piece on the ad-cloning machine tapped into something.

Thousands of new subscribers. Hundreds of DMs. Convos with people I've looked up to for years (and stay tuned for some big announcements on that!).

But I kept thinking about one thing:

"The ad system is incredible. But what about video?"

You can't screenshot a viral TikTok and hand it to a designer. So founders try to shoot it themselves: ring light, bad acting, and three hours of work for something that reads as brand content the moment it hits the feed.

Here's what they're missing:

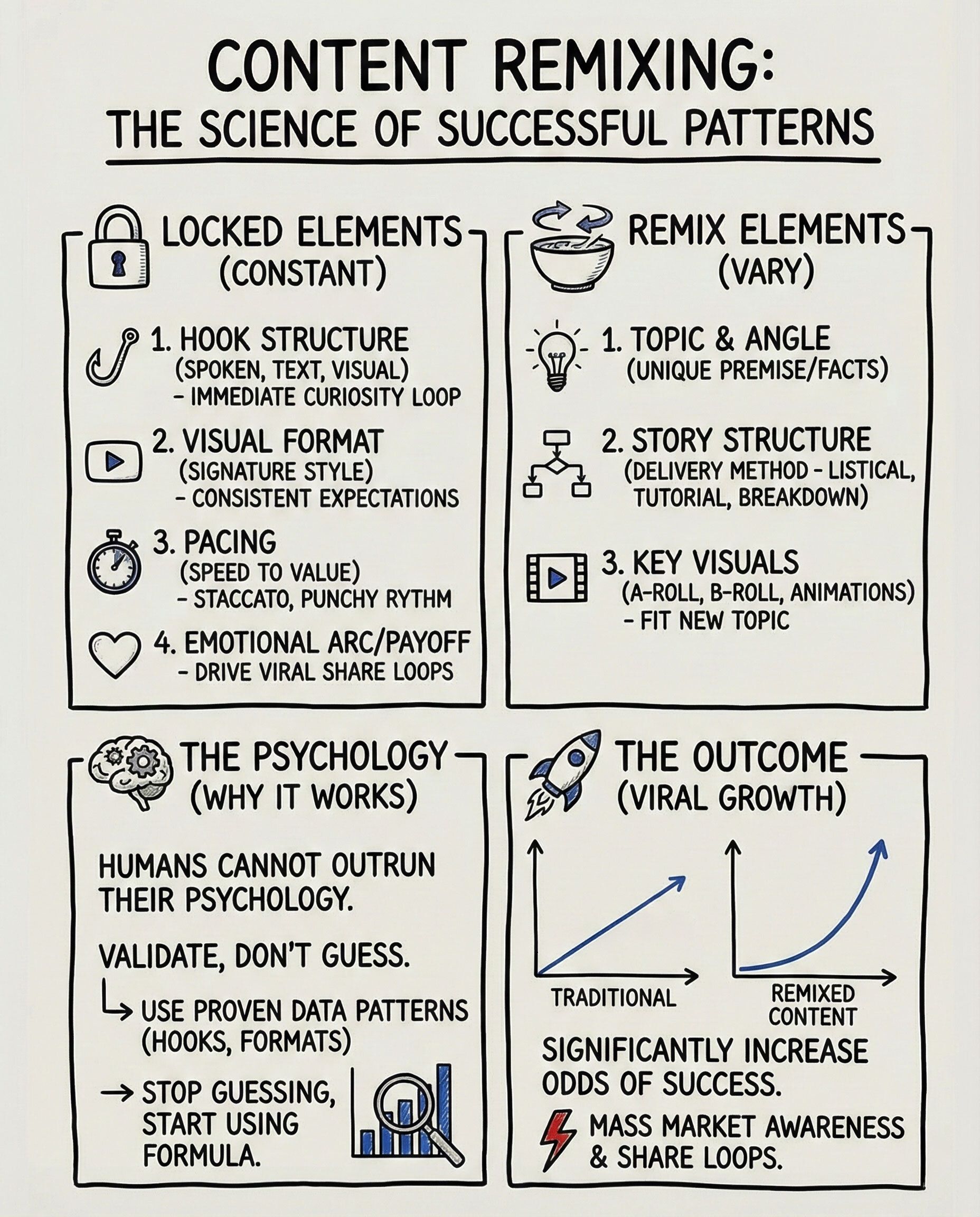

The goal isn't to copy viral videos. It's to hold the winning components constant and remix the rest.

Take the elements that made it work: the hook structure, the visual format, the emotional arc, the pacing. Keep those locked. Then vary everything else: the angle, the topic, the story, the brand signals.

That's systematic remixing. And you can do it at scale.

So I built an AI that does exactly that.

I'm giving away the entire workflow. The n8n files. The prompts. Everything.

Here's the system.


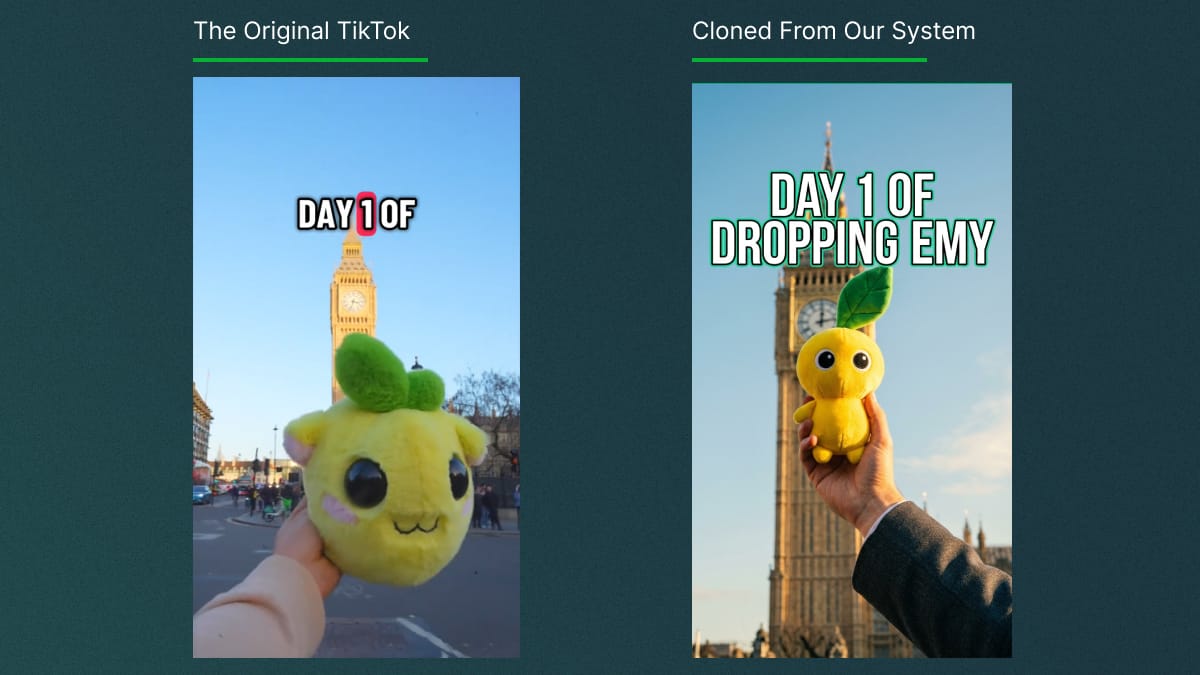

I call it Viral Twin because the output preserves the psychological fingerprint of what worked while generating something visually distinct.

Paste a TikTok URL. Wait 3 minutes. Get a branded twin that shares psychological DNA with the original.

Most AI prompts are pretty basic: "Create a video of someone in a kitchen talking to camera." The model has infinite degrees of freedom, so it picks the most average, middle-of-the-road path. You get generic slop.

Viral Twin uses constraints. The system first decodes why the original worked at a psychological level:

What emotion does it trigger in the first 1.5 seconds?

Where does your eye go first, second, third?

What's the exact camera angle, lighting direction, composition grid?

When does the pattern interrupt hit relative to the audio?

What's the promise, payoff, and surprise structure?

Result: Same viral mechanics. Completely different visuals. Your brand, not their knockoff.
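One way to picture the constraint idea is a prompt builder that keeps the decoded mechanics fixed and swaps in only the brand-specific parts. This is a minimal sketch, not the actual Viral Twin prompt: the field names (`hook_emotion`, `eye_path`, `camera`, `pattern_interrupt`) and the `build_prompt` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LockedMechanics:
    """Elements decoded from the original video that stay constant (hypothetical fields)."""
    hook_emotion: str
    eye_path: tuple[str, ...]
    camera: str
    pattern_interrupt: str


@dataclass(frozen=True)
class Variation:
    """Elements that change for every twin (hypothetical fields)."""
    topic: str
    brand_signal: str


def build_prompt(locked: LockedMechanics, var: Variation) -> str:
    """Combine the fixed constraints with one variation into a single prompt string."""
    lines = [
        f"Subject: {var.topic}",
        f"Brand signal (subtle): {var.brand_signal}",
        "Constraints (do not change):",
        f"- Emotion in the first 1.5 seconds: {locked.hook_emotion}",
        f"- Eye path: {' -> '.join(locked.eye_path)}",
        f"- Camera and lighting: {locked.camera}",
        f"- Pattern interrupt: {locked.pattern_interrupt}",
    ]
    return "\n".join(lines)
```

Two calls with the same `LockedMechanics` and different `Variation` values produce prompts that differ only above the constraints block, which is the "same mechanics, different visuals" idea in miniature.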

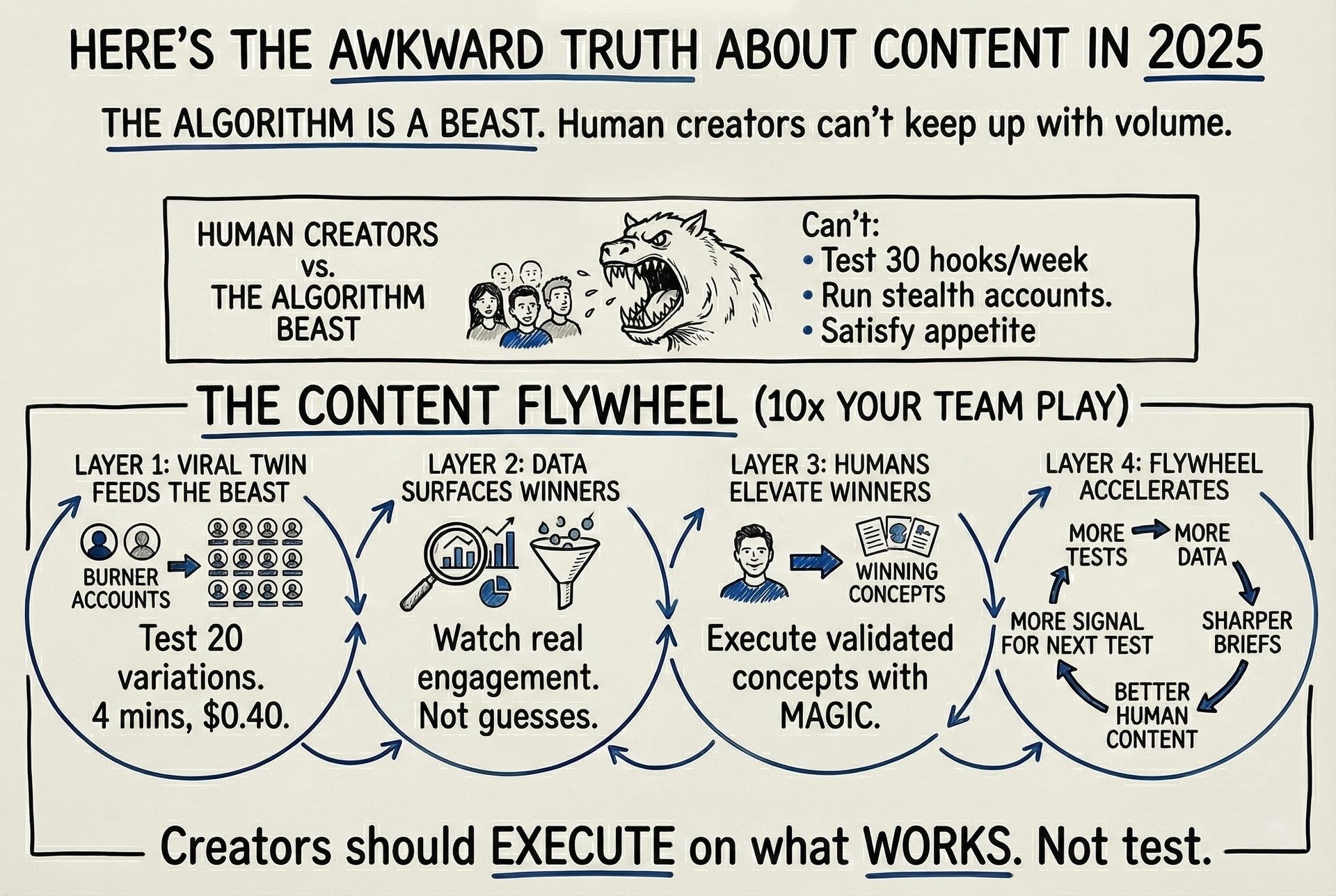

The Volume Problem

Here's the awkward truth: the algorithm demands more volume than any human team can sustainably produce.

Your best creators (the ones who get it) can't test 30 hook variations per week, run stealth accounts, and feed a dozen platform formats simultaneously.

This isn't "replace your team." It's "10x your team."

The flywheel:

Viral Twin feeds volume — Test hooks and angles across burner accounts. 20 variations while competitors run 2. Cost: $0.40 each.

Data surfaces winners — See what actually gets traction, not what the team debated for 45 minutes.

Creators elevate proven concepts — "This hook gets 3x holds. Now make it hit." They're executing on validated concepts, not guessing.

Nothing replaces a creator who feels it. But that creator shouldn't burn hours on hook variations. They should execute on the one you already know works.
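The "data surfaces winners" step can be as simple as ranking variations by how well the hook holds viewers. The sketch below assumes you can export views and holds per variation; the `HookResult` shape, the definition of a "hold", and the 3x threshold are illustrative assumptions, not something the workflow files define.

```python
from dataclasses import dataclass


@dataclass
class HookResult:
    name: str
    views: int
    holds: int  # assumed metric: viewers who stayed past the hook

    @property
    def hold_rate(self) -> float:
        # Guard against variations that have not been served yet.
        return self.holds / self.views if self.views else 0.0


def winners(results: list[HookResult], baseline_rate: float, lift: float = 3.0) -> list[HookResult]:
    """Return hooks whose hold rate is at least `lift` times the baseline, best first."""
    passing = [r for r in results if r.hold_rate >= lift * baseline_rate]
    return sorted(passing, key=lambda r: r.hold_rate, reverse=True)
```

The output of `winners` is the short list you hand to a creator, instead of the full set of variations.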

The 4-Stage Pipeline

| Stage | What Happens | What It Unlocks |
|---|---|---|
| CAPTURE | Paste TikTok URL, fetch video data and playback | Access to competitor content without manual downloading |
| DECODE | AI watches full video with audio, identifies hook moment, peak moment, virality mechanism | Understand why it worked, not just what it shows |
| EXTRACT | Pull key frames, run visual DNA analysis on composition, lighting, objects, colors | The psychological fingerprint you're transplanting |
| REBUILD | Generate new image preserving psychology + minimal brand signals, then animate | Branded twin ready to post |

Total runtime: 3-5 minutes from URL to finished asset.
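If it helps to see the four stages as code, here is a rough sketch of the hand-off between them. Every stage body is a placeholder (no network calls, no models), and the `state` dictionary keys are made up for illustration; the real system is an n8n workflow, not a Python script.

```python
from typing import Callable

Stage = Callable[[dict], dict]


def capture(state: dict) -> dict:
    # Placeholder: a real version would fetch video data for state["url"].
    return {**state, "video": f"video-for:{state['url']}"}


def decode(state: dict) -> dict:
    # Placeholder: a real version would ask a video model for hook and peak moments.
    return {**state, "analysis": {"hook_s": 1.2, "peak_s": 8.5}}


def extract(state: dict) -> dict:
    # Placeholder: one frame per moment found in DECODE.
    frames = [f"frame@{t}s" for t in state["analysis"].values()]
    return {**state, "frames": frames}


def rebuild(state: dict) -> dict:
    # Placeholder: a real version would generate a seed image, then animate it.
    return {**state, "asset": f"twin-from-{len(state['frames'])}-frames"}


PIPELINE: list[tuple[str, Stage]] = [
    ("CAPTURE", capture),
    ("DECODE", decode),
    ("EXTRACT", extract),
    ("REBUILD", rebuild),
]


def run(url: str) -> dict:
    """Pass one state dict through each stage in order, recording progress."""
    state: dict = {"url": url, "completed": []}
    for name, stage in PIPELINE:
        state = stage(state)
        state["completed"] = state["completed"] + [name]
    return state
```

Each stage only reads what earlier stages wrote, so you can swap a model or provider inside one stage without touching the others.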

What the AI Actually Sees

The workflow runs two models in sequence:

Model 1: The Vibe Check (Gemini 3 Pro)

Watches the full video with audio. Returns structured data:

Hook timestamp: 00:01.200

Hook element: Direct eye contact with knowing smirk

Peak timestamp: 00:08.500

Peak element: Unexpected product reveal with genuine reaction

Virality mechanism: Pattern interrupt via misdirection—viewer expects complaint, gets solution

Audio energy: Calm buildup → sharp contrast at peak

This is the "why it worked" in machine-readable form.
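To make "machine-readable" concrete, here is a sketch of how that output could be parsed downstream, assuming the model is asked to reply in JSON. The key names (`hook_timestamp`, `peak_element`, and so on) are my guesses for illustration and may not match the actual prompt's schema.

```python
import json
from dataclasses import dataclass


def to_seconds(stamp: str) -> float:
    """Convert an 'MM:SS.mmm' timestamp such as '00:08.500' to seconds."""
    minutes, seconds = stamp.split(":")
    return int(minutes) * 60 + float(seconds)


@dataclass
class VibeCheck:
    hook_s: float
    hook_element: str
    peak_s: float
    peak_element: str
    mechanism: str

    @classmethod
    def from_json(cls, raw: str) -> "VibeCheck":
        # Key names below are assumed, not taken from the workflow files.
        data = json.loads(raw)
        return cls(
            hook_s=to_seconds(data["hook_timestamp"]),
            hook_element=data["hook_element"],
            peak_s=to_seconds(data["peak_timestamp"]),
            peak_element=data["peak_element"],
            mechanism=data["virality_mechanism"],
        )

    def frame_times(self) -> list[float]:
        """Timestamps (in seconds) worth extracting as key frames."""
        return [self.hook_s, self.peak_s]
```

The two timestamps are what the frame-extraction step needs, which is why they are converted to plain seconds up front.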

Model 2: Visual Forensics (VisionStruct)

Analyzes extracted frames pixel-by-pixel:

Every object identified and located

Color palette with exact hex values

Composition grid (rule of thirds, leading lines, focal weight)

Lighting direction and quality

Text/overlay positioning

This is the DNA sample that gets transplanted.
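Two of those fields are easy to sanity-check in code: hex colors and where an object sits on the rule-of-thirds grid. The helpers below are illustrative only (VisionStruct's real output format isn't shown here), and they assume pixel coordinates with the origin at the top-left of the frame.

```python
import re

HEX_COLOR = re.compile(r"#[0-9a-fA-F]{6}")


def is_hex_color(value: str) -> bool:
    """True for six-digit hex colors such as '#1A2B3C'."""
    return HEX_COLOR.fullmatch(value) is not None


def thirds_cell(x: float, y: float, width: int, height: int) -> tuple[int, int]:
    """Return (row, col), each 0..2, of the rule-of-thirds cell containing (x, y).

    Assumes 0 <= x <= width and 0 <= y <= height.
    """
    col = min(int(3 * x / width), 2)  # clamp so x == width lands in the last column
    row = min(int(3 * y / height), 2)
    return row, col
```

For a 1080x1920 vertical frame, a face centered at (540, 300) lands in the top-middle cell, which is the kind of placement you would want the generated twin to reproduce.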

The Stack

| Component | Tool | Cost |
|---|---|---|
| Orchestration | n8n (self-hosted) | Free |
| TikTok Data | | ~$20/mo |
| Video Analysis | Gemini 2.5 Pro via OpenRouter | ~$0.02/video |
| Frame Extraction | FAL FFmpeg API | ~$0.01/video |
| Image Generation | Gemini 3 Pro Image via OpenRouter | ~$0.05/image |
| Video Generation | Kling 2.6 Pro via FAL | ~$0.30/video |
| Storage | Google Sheets | Free |
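The per-asset figures in the table add up to roughly $0.38, which is where the "$0.40 each" number comes from. Here is the arithmetic as a small sketch, assuming one generated image and one generated clip per twin; the 20-per-week volume and four-week month are example inputs, and your actual usage and pricing may differ.

```python
from decimal import Decimal

# Approximate per-asset costs from the table above (one image and one clip per twin assumed).
PER_ASSET = {
    "video_analysis": Decimal("0.02"),
    "frame_extraction": Decimal("0.01"),
    "image_generation": Decimal("0.05"),
    "video_generation": Decimal("0.30"),
}
TIKTOK_DATA_MONTHLY = Decimal("20")  # flat subscription, not per asset


def cost_per_twin() -> Decimal:
    """Sum of the variable costs for one finished twin."""
    return sum(PER_ASSET.values(), Decimal("0"))


def monthly_cost(twins_per_week: int, weeks: int = 4) -> Decimal:
    """Flat data subscription plus variable cost for the given volume."""
    return TIKTOK_DATA_MONTHLY + cost_per_twin() * twins_per_week * weeks
```

At 20 twins a week, video generation dominates the variable cost, so that is the line to watch if you scale volume.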

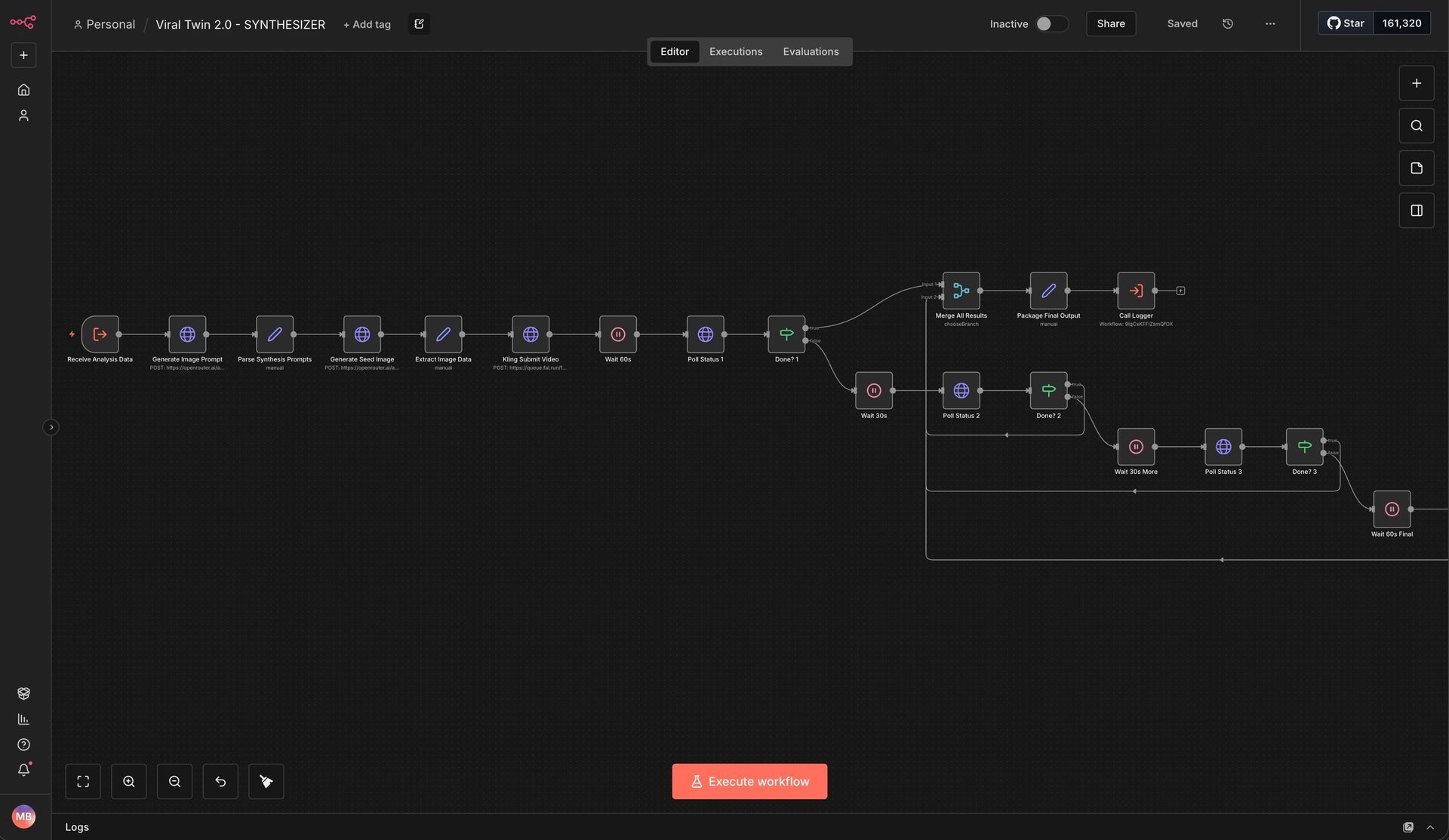

The Architecture

FORM INPUT (TikTok URL) → ANALYZER (AI Vision + Audio) → SYNTHESIZER (Image Gen + Video) → LOGGER (Google Sheets)

FORM INPUT: Paste URL, validate it's real, fetch the video data from TikTok. 9 nodes.

ANALYZER: Gemini watches the video and identifies the hook/peak moments. FAL extracts key frames. VisionStruct runs pixel-level forensics on composition, lighting, objects. 16 nodes.

SYNTHESIZER: Takes the analysis, generates a constraint-based image prompt, creates the seed image (Nano Banana Pro), then animates it to video (Kling 2.6). 19 nodes.

STITCHER: Takes the generated video clips and stitches them together with FFmpeg. 22 nodes.

LOGGER: Writes everything to a Google Sheet with the original URL, virality analysis, and final video link. 4 nodes.
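For the "validate it's real" step in FORM INPUT, a cheap first pass is checking the URL's shape before spending an API call on it. This sketch only checks shape, not whether the video exists, and the accepted hosts and path pattern are my assumptions about common TikTok link formats rather than an official list.

```python
import re
from urllib.parse import urlparse

# Assumed URL shapes; TikTok may use others.
VIDEO_PATH = re.compile(r"/@[\w.\-]+/video/\d+/?")
FULL_HOSTS = {"tiktok.com", "www.tiktok.com", "m.tiktok.com"}
SHORT_HOSTS = {"vm.tiktok.com", "vt.tiktok.com"}


def looks_like_tiktok_url(url: str) -> bool:
    """Shape check only: https, a known host, and a plausible path."""
    parts = urlparse(url.strip())
    if parts.scheme != "https" or not parts.hostname:
        return False
    host = parts.hostname.lower()
    if host in SHORT_HOSTS:
        # Short links carry an opaque code as the path.
        return len(parts.path.strip("/")) > 0
    if host in FULL_HOSTS:
        return VIDEO_PATH.fullmatch(parts.path) is not None
    return False
```

Anything that fails this check can be rejected at the form, so the paid steps downstream only ever see plausible inputs.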

Get the Workflow

I'm packaging Viral Twin as an n8n template. Same 70-node system I'm running.

I'm giving you all five workflow files. No catch.

Download the complete 5-part automation → Google Drive or GitHub

If this gave you an edge, forward it to one person who would benefit.
